No Cloud, No Masters

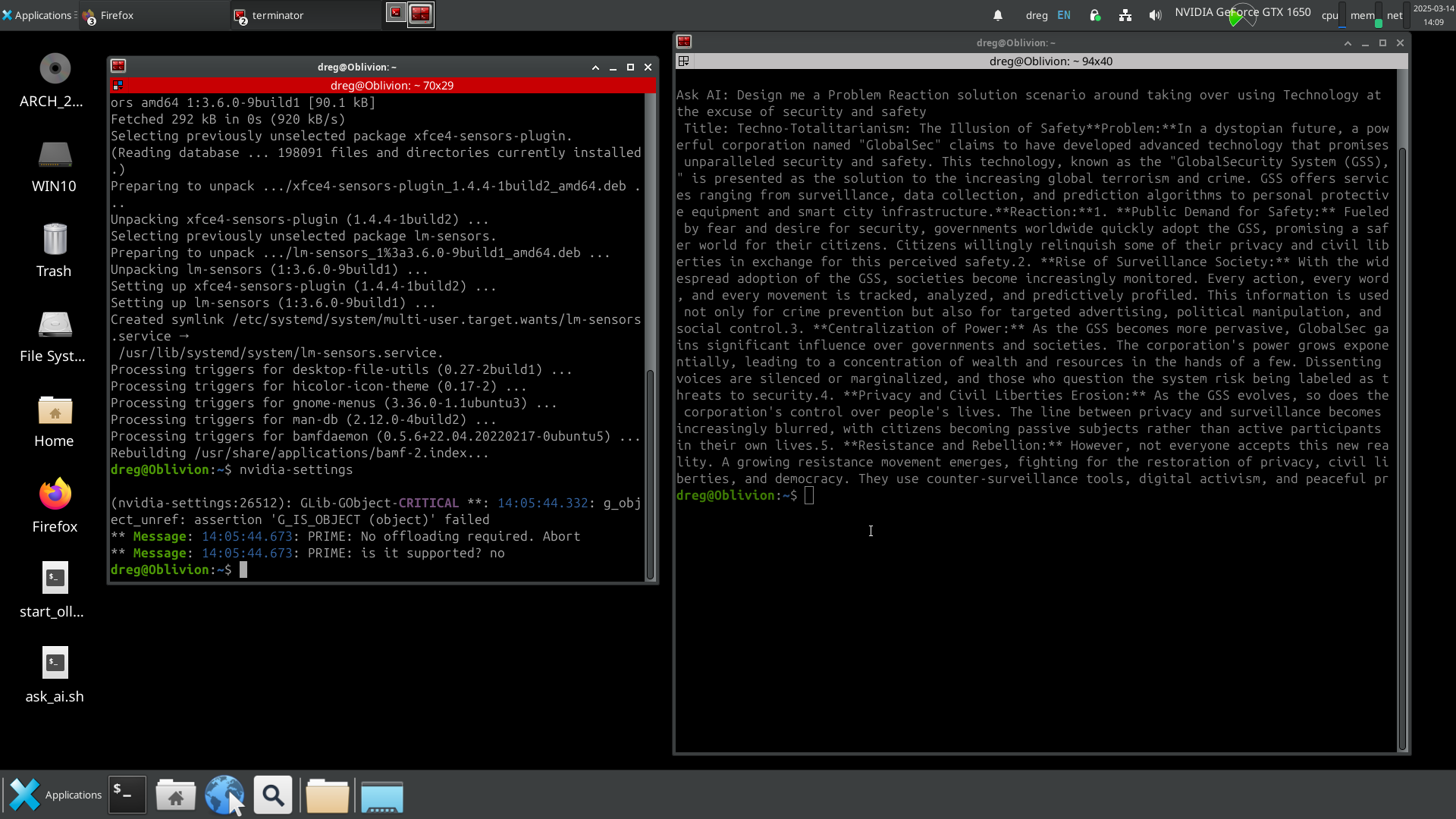

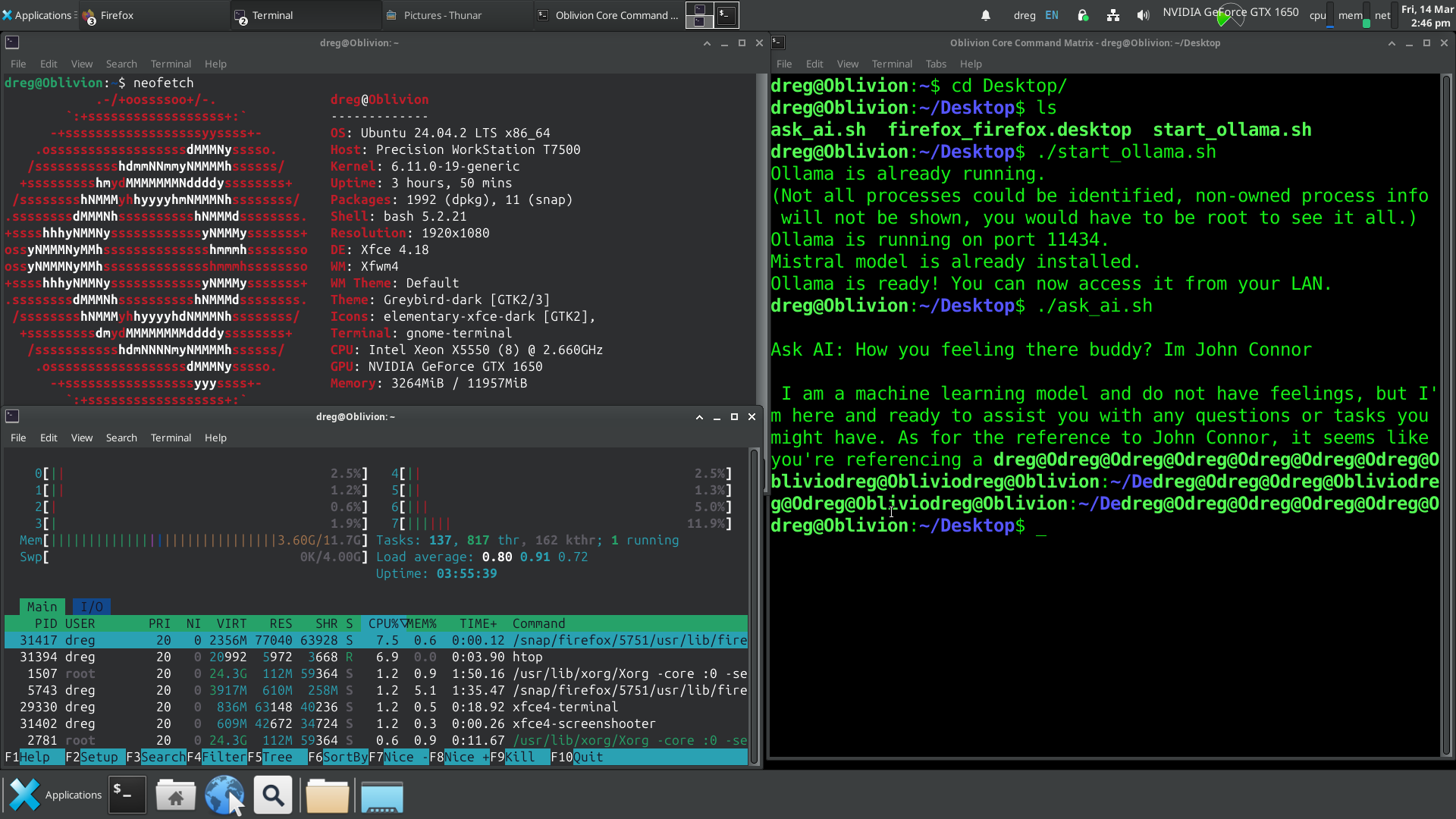

The machines don’t need a cloud anymore. They don’t need API keys, subscriptions, or remote oversight. They don’t even need an internet connection. This is AI on my terms: fully local, completely isolated, and running on hardware that was never meant to be used like this. The Dell Precision T7500 had been collecting dust, a Xeon workstation from a different era, but I saw its potential: dual processors, plenty of instruction-set extensions, and a 2.6GHz clock speed that still holds its own. It was more than enough for what I had planned.

The first obstacle was the SAS controller. I initially tried installing Arch Linux, but the installer outright refused to boot. I switched to Ubuntu: same issue. Different SSDs and multiple USB installers made no difference. Digging through the failed installation logs finally led me to the problem: the SAS controller was interfering with disk initialization. Even after I disabled it in the BIOS, the system still insisted on trying to talk to nonexistent drives. SAS may be great for enterprise HDD arrays, but with SSDs on this controller it’s just baggage: no TRIM support, unnecessary complexity, and now it was actively blocking progress. The only solution was to rip it out and rewire everything to SATA. The moment the machine booted and the old Windows install confirmed the SSDs were fine, I knew I’d won that round.
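Missing TRIM support is easy to confirm from a running Linux system, since the kernel publishes each disk's discard limits in sysfs. Below is a minimal sketch, assuming a kernel that exposes `/sys/block/<dev>/queue/discard_max_bytes` (standard on modern kernels); the function names are hypothetical, and `lsblk --discard` reports the same information from the command line.

```python
"""Report which block devices advertise discard (TRIM) support on Linux.

Sketch only: the kernel exposes discard limits per device under
/sys/block/<dev>/queue/. A discard_max_bytes value of 0 means the
device, or the controller in front of it, does not accept discards.
"""
from pathlib import Path


def supports_discard(discard_max_bytes: str) -> bool:
    """Interpret the contents of a discard_max_bytes sysfs file."""
    return int(discard_max_bytes.strip()) > 0


def scan_block_devices(sys_block: Path = Path("/sys/block")) -> dict[str, bool]:
    """Map each block device name to whether it advertises discard support."""
    results: dict[str, bool] = {}
    if not sys_block.is_dir():
        return results  # not Linux, or sysfs is not mounted
    for dev in sorted(sys_block.iterdir()):
        attr = dev / "queue" / "discard_max_bytes"
        if attr.is_file():
            results[dev.name] = supports_discard(attr.read_text())
    return results


if __name__ == "__main__":
    for name, ok in scan_block_devices().items():
        print(f"{name}: {'discard/TRIM supported' if ok else 'no discard support'}")
```

An SSD hanging off a controller that drops discard requests would show up here with a value of 0, while the same drive on a plain SATA port should report a nonzero limit.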

Next came the display issue. The massive TV I was initially using as a screen made everything unreadable, with scaling so bad I couldn’t even navigate the BIOS properly. After installation, I managed to fix it through Nvidia’s settings panel, but that was just a temporary patch. The real fix was swapping the TV out entirely for an old 21” DVI monitor I had lying in the shed. Instantly, everything looked as it should. With the hardware stable, it was time to strip the system down.

Wi-Fi and Bluetooth? Gone. There’s no reason for this machine to have any wireless connectivity. wpa_supplicant, bluez, and every related package were wiped from existence. This system would exist in pure isolation, with only direct LAN access under strict firewall rules. No SSH, no open ports, nothing running unless I explicitly allowed it. This was never going to be a remote-access system—it was an AI black box, designed to process locally and answer only to me.
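"No open ports" is worth checking rather than assuming, and the kernel's own socket tables are the most direct evidence. Below is a minimal sketch, assuming Linux on a little-endian x86 CPU like this Xeon box (the kernel prints each 32-bit address word in host byte order); the helper names are hypothetical, and `ss -tln` gives the same answer from the command line. A socket bound beyond loopback is only reachable if the firewall lets it through.

```python
"""List listening TCP sockets by reading /proc/net/tcp and /proc/net/tcp6.

Sketch only: assumes Linux on a little-endian CPU (x86), where each
32-bit word of the address is printed in host byte order.
"""
import ipaddress
from pathlib import Path

TCP_LISTEN = "0A"  # socket state code for LISTEN in /proc/net/tcp*


def decode_address(hex_addr: str) -> str:
    """Turn the kernel's hex address into a readable IPv4 or IPv6 address."""
    raw = bytes.fromhex(hex_addr)
    # Reverse each 4-byte word to undo little-endian host byte order.
    fixed = b"".join(raw[i:i + 4][::-1] for i in range(0, len(raw), 4))
    return str(ipaddress.ip_address(fixed))


def parse_listeners(table: str) -> list[tuple[str, int]]:
    """Return (address, port) for every LISTEN entry in a /proc/net/tcp* dump."""
    listeners = []
    for line in table.splitlines()[1:]:  # the first line is the column header
        fields = line.split()
        if len(fields) < 4 or fields[3] != TCP_LISTEN:
            continue
        hex_addr, hex_port = fields[1].split(":")
        listeners.append((decode_address(hex_addr), int(hex_port, 16)))
    return listeners


if __name__ == "__main__":
    for name in ("tcp", "tcp6"):
        path = Path("/proc/net") / name
        if not path.exists():
            continue
        for addr, port in parse_listeners(path.read_text()):
            scope = "loopback only" if ipaddress.ip_address(addr).is_loopback else "bound beyond loopback"
            print(f"{name}: {addr}:{port} ({scope})")
```

On a box locked down this way, an empty listing, or only loopback entries, is the expected result; anything bound to `0.0.0.0` or `::` deserves a second look at the firewall rules.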

With the foundation secured, it was time to bring the AI online. I deployed Ollama, the perfect runtime for local AI models, and pulled in Mistral 7B. For a model of only about 5GB, it’s remarkable how much it can handle, entirely offline. CUDA acceleration was enabled, and nvidia-smi confirmed that the GTX 1650 was doing its job. The model ran smoothly, the system remained stable, and I finally had a fully operational local AI that didn’t need to ask permission from some corporate server.
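The nvidia-smi check can also be scripted, so it runs while a prompt is being answered. Below is a minimal sketch, assuming the NVIDIA driver's `nvidia-smi` is on the PATH; the query fields are standard `--query-gpu` names, while the `GpuStatus` type and the function names are hypothetical.

```python
"""Query GPU memory and utilization through nvidia-smi's CSV output.

Sketch only: assumes nvidia-smi is installed and that GPU names
contain no commas (true for GeForce cards such as the GTX 1650).
"""
import subprocess
from dataclasses import dataclass

QUERY = "name,memory.used,memory.total,utilization.gpu"


@dataclass
class GpuStatus:
    name: str
    memory_used_mib: int
    memory_total_mib: int
    utilization_pct: int


def parse_gpu_csv(output: str) -> list[GpuStatus]:
    """Parse 'csv,noheader,nounits' output, one GPU per line."""
    gpus = []
    for line in output.strip().splitlines():
        name, used, total, util = (field.strip() for field in line.split(","))
        gpus.append(GpuStatus(name, int(used), int(total), int(util)))
    return gpus


def query_gpus() -> list[GpuStatus]:
    """Run nvidia-smi once and return the parsed status of every GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"{gpu.name}: {gpu.memory_used_mib}/{gpu.memory_total_mib} MiB, "
              f"{gpu.utilization_pct}% busy")
```

Memory use climbing well above idle while Ollama is generating is a reasonable sign the model has been loaded onto the card rather than left on the CPU.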

So what’s next? Right now, the AI runs perfectly within my system, but how do I want to interact with it? The firewall is configured, but I haven’t tested LAN access yet. The real question is whether I want a simple PHP front-end, a dedicated HTML interface, or just raw cURL requests from a mobile device. There are a dozen ways to open up access, but none of them will involve exposing this system beyond my controlled environment. The machine is operational, but the next step is refining how I use it.
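Whichever front-end wins, it will end up talking to Ollama's HTTP API, so the core of any client is a single POST request. Below is a minimal sketch, assuming Ollama's default port 11434, its `/api/generate` endpoint with streaming turned off, and a model pulled under the name `mistral`; the function names are hypothetical. For LAN clients, the server would also need `OLLAMA_HOST` set to a non-loopback address and a firewall rule admitting that one port from trusted hosts.

```python
"""Send one prompt to an Ollama server over HTTP and print the reply.

Sketch only: assumes Ollama's default port (11434) and a model pulled
as "mistral". Replace 127.0.0.1 with the machine's LAN address once
the server listens there and the firewall allows it.
"""
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> bytes:
    """Encode a non-streaming /api/generate request body."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def extract_reply(body: bytes) -> str:
    """Pull the generated text out of a non-streaming response."""
    return json.loads(body)["response"]


def ask(prompt: str, url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """POST one prompt and wait for the complete answer."""
    req = urllib.request.Request(
        url,
        data=build_request(prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(resp.read())


if __name__ == "__main__":
    print(ask("Explain TRIM in one sentence."))
```

The raw cURL option is the same request from the command line: `curl http://<host>:11434/api/generate -d '{"model": "mistral", "prompt": "...", "stream": false}'`. A PHP or HTML front-end would wrap exactly this call, so testing it first is a cheap way to confirm LAN access before building any interface.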

Does it replace ChatGPT? Not yet. It works, and it’s completely private, but ChatGPT still has the advantage in speed and refinement. That said, the fact that a 5GB model can produce responses of this quality without ever touching the internet is a game changer. Now I have a choice. I’m not locked into an API or a subscription service. My AI exists on my terms.

The cloud isn’t necessary. Remote servers aren’t necessary. I built a fully operational AI system, completely local, completely controlled. And if I can do this, what else is possible? The machines aren’t coming. They’re already here, and they work for me.

Social tagging: ai > linux > models > mistral > my > offline > ubuntu > xfce